My Roles -

🎨 End-to-end Product Design

💼 Project Management

🔍 Market & User Research

For -

Tezign -

A startup building AI-powered creative platforms

With -

Lead Product Manager,

Software & AI Engineers,

Video Marketing Team

Duration -

Quick Glance 👉

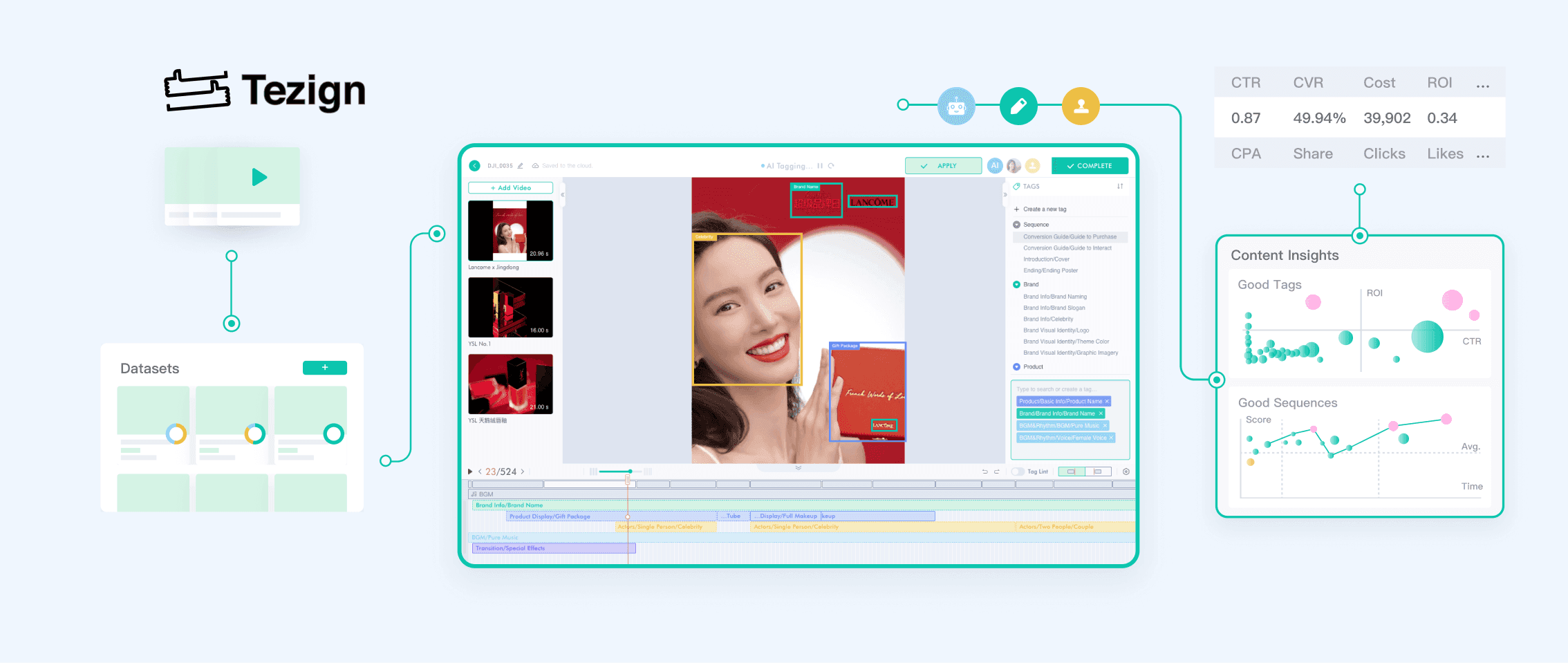

I interned as a Product Designer / Manager at Tezign, a content-tech startup building next-gen creative content platforms that empower content creation, optimization, and distribution.

I led the end-to-end design process of a semi-automated video annotation platform in a cross-functional team, which streamlined the original hybrid process for generating product-specific, high-granularity video annotations.

We successfully launched this platform within 3 months, resulting in an 80% reduction in video annotation time costs. Additionally, our platform played a pivotal role in validating the concept of insights-driven video marketing, garnering positive feedback from our top-tier clients like YSL China 🎉.

Key abilities:

Product 0-1

Product Architecture

Agile Prototyping

End-to-End Tool Design

XFN Collaboration

Background.

High-granularity video metadata is essential for high-quality video marketing insights

Videos have emerged as potent marketing tools for top brands, yet the connection between video content and its performance remains a "black box". Tezign's top-tier clients, especially makeup brands (e.g., YSL China), were eagerly seeking comprehensive insights into marketing video performance. At the time, video insights fell short of these expectations because high-granularity video content metadata was lacking.

Problem.

Existing tools fail to meet the unique requirements for marketing video annotation

Through initial research, we learned that existing annotation platforms fall short of the following two critical requirements:

Background Research.

Special structure of marketing video annotations

Through cross-team collaboration with content strategists, marketing specialists, and engineers, Tezign validated the feasibility of a specialized video metadata format aimed at insightful video analysis. To produce metadata in this format, the annotation process includes temporal-level segmentation and element-level, structured tagging.
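To make the two levels concrete, here is a minimal sketch of how such metadata could be modeled: temporal segments, each carrying element-level tags. This is only an illustration; the class names, field names, and tag categories are hypothetical and do not reflect Tezign's actual format.

```python
from dataclasses import dataclass, field


@dataclass
class Tag:
    """One element-level tag (category and value are hypothetical examples)."""
    category: str
    value: str


@dataclass
class Segment:
    """A temporal segment of the video with its structured tags."""
    start_s: float
    end_s: float
    tags: list[Tag] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not 0 <= self.start_s < self.end_s:
            raise ValueError("segment must satisfy 0 <= start_s < end_s")


@dataclass
class VideoAnnotation:
    video_id: str
    segments: list[Segment] = field(default_factory=list)

    def tags_at(self, t: float) -> list[Tag]:
        """Return every tag whose segment covers time t (in seconds)."""
        return [tag for seg in self.segments
                if seg.start_s <= t < seg.end_s
                for tag in seg.tags]


annotation = VideoAnnotation(
    video_id="demo-video",
    segments=[
        Segment(0.0, 4.5, [Tag("scene", "close-up"), Tag("product", "lipstick")]),
        Segment(4.5, 12.0, [Tag("scene", "tutorial")]),
    ],
)
print([t.value for t in annotation.tags_at(2.0)])
```

Keeping tags as category/value pairs rather than free text is what makes the annotations queryable later, for example when comparing segments that feature a product against performance data.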

Labor-Intensive & Error-Prone Workflow

Through my background research, I discovered that content strategists currently depend on a hybrid workflow across multiple tools to create video annotations in a specialized format.

However, such a workflow is inefficient and undermines the accuracy of the annotations.

Two areas that we can improve:

Through discussions within the cross-functional team, I identified 2 areas that we can improve:

Interviews w/ Stakeholders.

Analyze the existing hybrid workflow and identify gaps between current processes and user expectations

Before diving into building a new platform, I questioned myself:

🤔 Why do users think a new annotation platform is necessary?

🤔 What is the gap between the current condition and users’ desired outcome & user experience?

Therefore, I interviewed 2 content strategists and 3 video annotators, from which I identified unmet or unsatisfactory user needs.

💡 A disorganized collaboration workflow significantly contributes to poor annotation quality and reduced efficiency

After analyzing the current collaboration workflow, I found that content strategists and annotators lacked an efficient way to share videos, update tagging structures, review annotations, and merge data.

WorkFlow Design #1.

Streamline the cross-role collaborative workflow and ensure annotation quality

I created the new workflow to streamline the collaboration process and thus ensure the format and quality of annotations. First, it empowers content strategists to directly assign tasks, share videos and tagging structures via this platform. Second, by providing real-time visibility into the ongoing annotation process, they can more effectively oversee and manage quality. Furthermore, following discussions with the team, we decided to incorporate the role of "reviewers" to assess annotations.

🤔 How might we reduce the workload and improve efficiency during the video annotation process?

While this workflow improves quality control, manual annotation remains time-consuming. How might we enhance efficiency in video annotation?

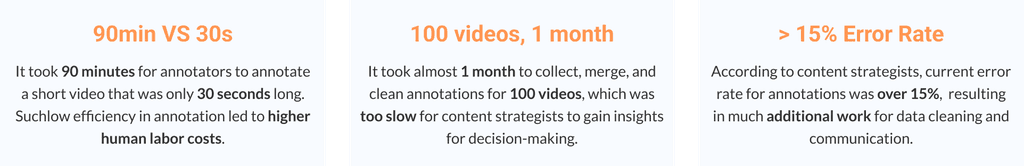

Contextual inquiry.

Digging deeper into annotators' behavioral patterns and cognitive processes

To answer this question, I conducted contextual inquiries with annotators: I observed their behaviors and asked them to think aloud so that I could gain insight into their cognitive processes.

For example, when segmenting a video for annotation, annotators need to watch it frame by frame and identify each shot or specific objects. They may also transcribe the video using another tool and refer to the transcript. Such common yet repetitive cognitive and behavioral patterns suggest huge potential for integrating AI into the workflow to improve efficiency and reduce users' workload.

Technical exploration.

💡 How might we leverage AI to replace or assist humans in those repetitive cognitive processes and manual work?

I shared the findings with the team, and together we brainstormed specific AI/ML techniques that could address pain points in the annotation process and improve efficiency and quality. We also evaluated the feasibility and ROI of each technique, and on that basis prioritized scene segmentation, speech-to-text, OCR, and a few other auto-tagging methods for the MVP.

"Though integrating these AI techniques might incur initial technical costs, it's an essential and pivotal step toward establishing the next-gen AI-powered content management platform that augments content production, optimization, and distribution." - Tezign Product Lead

WorkFlow design #2.

A human-AI collaborative annotation workflow

Based on findings from the observation, I then redesigned the annotation workflow with AI annotation integrated to reduce users' workload and thus improve their work efficiency.

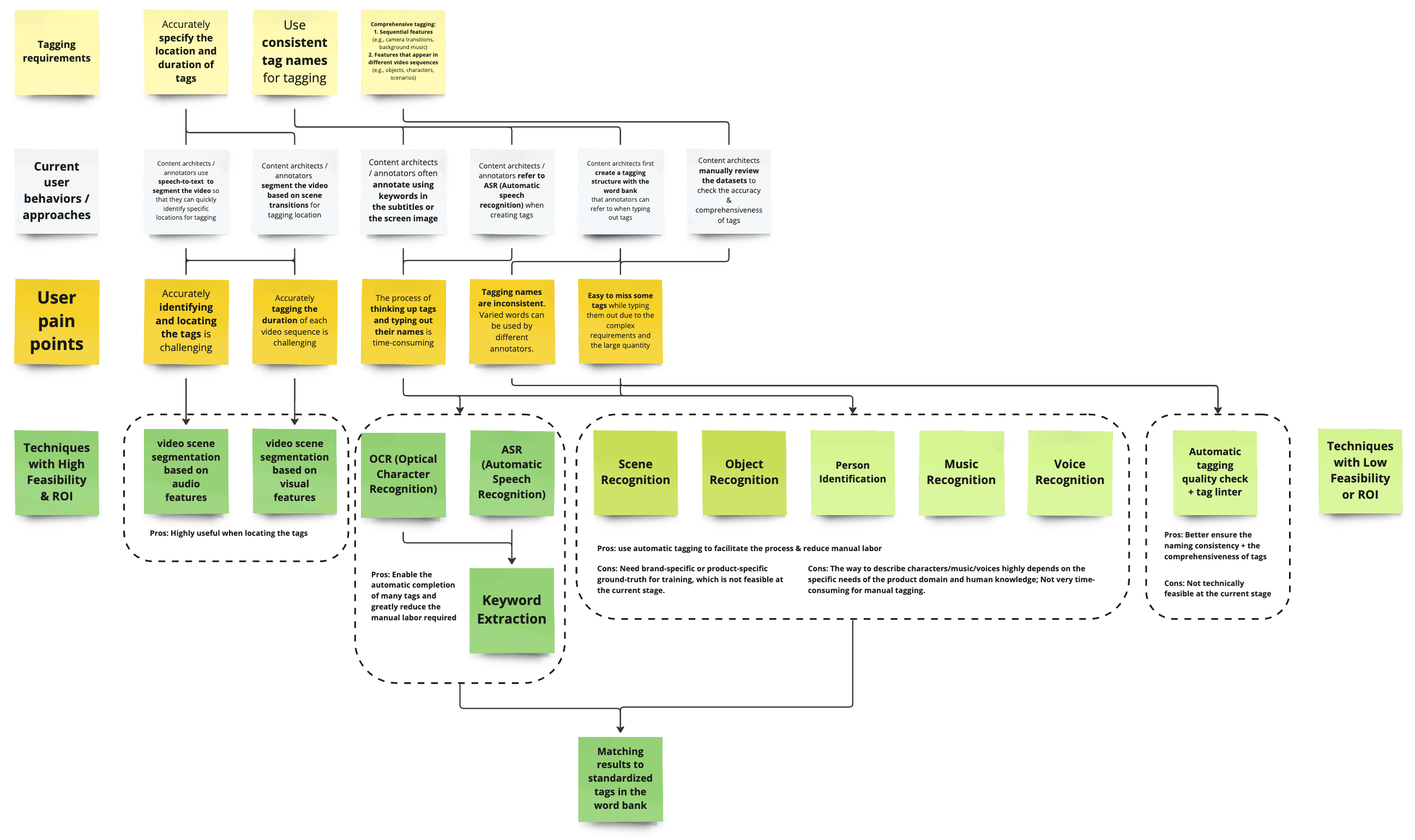

Platform IA.

Organize the information architecture for the new video content analysis platform

I created the product architecture for the new video content analysis platform. After discussing with the product management team, we prioritized 3 core modules for the MVP: dataset management, tag management, and the annotation tool.

I organized the Information Architecture that structured all the important features as well as detailed information and interactions. I also marked the launching phases by facilitating discussions across teams.

Wireframing -

Core User Flow.

Illustrate the main user flow for our MVP

To ensure a smooth user flow across different features, I created wireframes showing the main user flow that our MVP phase should cover.

Wireframing -

Annotation Tool.

Illustrate the layout of the annotation tool

When designing the layout of the annotation tool, I researched video editing tools, as they similarly break videos down into different "layers" (e.g., visual, audio, text, transcripts). I applied a similar structure so the tool would feel familiar and easy to use. During concept testing, the approach received positive feedback.

Iteration Process.

This section will highlight key iterations I made to the interactions for video annotation.

Segmentation.

Facilitate efficient and smooth temporal segmentation

To add a temporal annotation to the video, the first step is to identify where to segment the video. Supporting smooth segmentation is vital to annotation quality and efficiency. Here are some key iterations I made based on usability testing results:

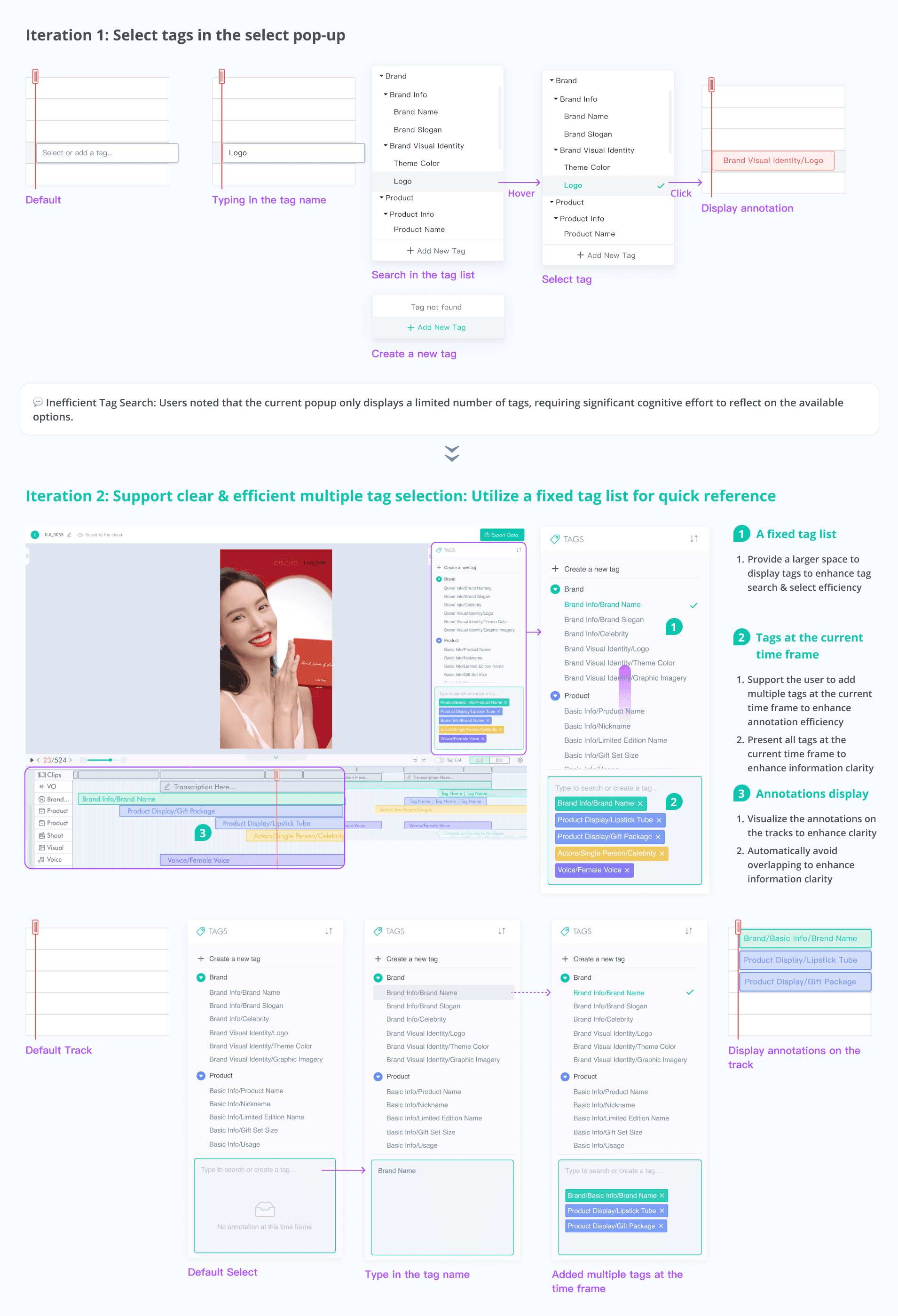

Tag Selection.

Enhance clarity and efficiency in tag search & selection

After segmentation, annotators need to select tags for that segment. I iterated on the interactions after agile development and usability testing:

Step1. Import Data

Now: Content strategists can use the centralized platform to manage datasets and monitor the ongoing annotation process in real-time.

Before: Previously, content strategists had to share videos with annotators through shared folders, resulting in disorganized data and task management.

Step2. AI Pre-Annotation

Now: AI automates segmentation using scene transitions and transcripts, helping annotators annotate precisely and efficiently. Additionally, using ML models such as product, scene, and music recognition, the system automatically completes some annotations.

Before: Previously, annotators had to watch the video and manually locate the segments. The manual annotation process was very labor-intensive and time-consuming.
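As a rough illustration of the pre-annotation step, the sketch below merges two sources of candidate boundaries (detected shot cuts and transcript sentence breaks) into proposed segments for the annotator to refine. It assumes the boundary timestamps have already been produced by upstream models; the function name, parameters, and the minimum-length rule are hypothetical and not the platform's actual logic.

```python
def propose_segments(duration_s, shot_cuts_s, transcript_breaks_s, min_len_s=1.0):
    """Merge two sources of candidate boundaries into (start, end) segments.

    A boundary closer than min_len_s to the previously kept boundary is
    dropped so the annotator is not shown tiny fragments.
    """
    candidates = sorted(set(shot_cuts_s) | set(transcript_breaks_s))
    boundaries = [0.0]
    for t in candidates:
        if 0.0 < t < duration_s and t - boundaries[-1] >= min_len_s:
            boundaries.append(t)
    # Avoid a too-short final segment by dropping the last interior boundary.
    if len(boundaries) > 1 and duration_s - boundaries[-1] < min_len_s:
        boundaries.pop()
    boundaries.append(duration_s)
    return list(zip(boundaries[:-1], boundaries[1:]))


segments = propose_segments(
    duration_s=30.0,
    shot_cuts_s=[4.5, 12.0, 12.3, 29.8],
    transcript_breaks_s=[4.6, 20.0],
)
print(segments)
# [(0.0, 4.5), (4.5, 12.0), (12.0, 20.0), (20.0, 30.0)]
```

The proposals are only a starting point: the annotator still adjusts, splits, or merges segments in the manual step that follows.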

Step3. Manual Annotation

Now: Annotators can use AI segments and shortcuts to quickly locate the segments. The fixed tag list makes it easier to search and add tags.

Before: Locating annotations or adding tags imposed a significant cognitive burden and demanded extensive manual effort.

🚀 Improvement in annotation efficiency and quality

The new platform reduced annotation time for a 30s video from 90min to 15min. In addition, the error rate decreased from 15% to about 5%.
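For readers who want the relative figures, here is a quick calculation based only on the numbers quoted above. Because the post-launch error rate is stated as "about 5%", the second result is approximate.

```python
def relative_reduction(before, after):
    """Fraction by which a value dropped relative to its starting point."""
    return (before - after) / before


time_saved = relative_reduction(90, 15)      # minutes per 30s video
error_drop = relative_reduction(0.15, 0.05)  # error rate, approximate

print(f"annotation time: {time_saved:.0%} lower")  # 83% lower
print(f"error rate: {error_drop:.0%} lower")       # 67% lower
```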

🫶 Video insights gained recognition from top-tier clients

We used our own platform to generate 10,000+ annotations within 2 weeks of the MVP launch. Based on these annotations, we pitched a video insights report to YSL China and Lancome China. The concept of video tagging for insights generation gained recognition from these top-tier clients!

What I learned.

🧠 Push myself to think from a higher level

At Tezign, being part of a young startup granted me the freedom to delve into various fields like research, design, and product management. I was fortunate to collaborate with supportive colleagues who encouraged exploration. This experience taught me the importance of a higher-level problem-solving approach—questioning the 'why' behind choosing A over B, timing considerations, aligning design approaches with business goals, and evaluating implementation ROI. Collaborating across teams enriched my perspective on these critical aspects.

🧪 Agile prototyping and testing for intricate interactions

The tool design involves intricate and flexible interactions, such as dragging and pressing "Enter," which are challenging to test using standard design tools. Additionally, ensuring technical feasibility for these interactions can be difficult. To address this, I’ve learned to collaborate closely with engineers to build beta versions for agile testing. This agile, iterative approach not only improves the effectiveness of design in real-world testing but also ensures that the designs are technically feasible.

💖 The beauty of building tools

I came to admire the beauty and joy of creating elegant, natural interactions for tools. This project taught me not just to observe users' behaviors, but also to delve into the intricacies of their cognitive processes, synthesize behavioral and thinking patterns, and then create tool interactions that not only satisfy their needs but also anticipate them.

Further Thoughts.

🧘‍♀️ Art of data, science of content

I asked myself: Can we truly decode unstructured, creative content with semantic depth? Can data genuinely guide us in creating exceptional creative content? I am still enthusiastically exploring how data and AI can be used to comprehend creative content, extract insights, and facilitate content generation. While our primary assessment of video quality currently relies on business metrics such as ROI, clickthrough rate, and conversion rate, I anticipate that in the future we will have a more comprehensive way to evaluate videos, ultimately improving video marketing strategies.